The economics of artificial intelligence have been a hot topic of late, with startup DeepSeek AI claiming eye-opening economies of scale in deploying GPU chips.

Two can play that game. On Wednesday, Google announced that its latest open-source large language model, Gemma 3, came close to the accuracy of DeepSeek’s R1 with a fraction of the estimated computing power.

Using “Elo” scores, a common measurement system used to rank chess players and athletes, Google claims that Gemma 3 comes within 98% of the score of DeepSeek’s R1: 1338 versus 1363 for R1.
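
For readers who want to check the arithmetic, here is a minimal sketch. The two ratings are the figures quoted above. The `elo_expected_score` helper uses the classic Elo formula on a 400-point scale; whether the leaderboard behind these scores uses exactly that scale is an assumption, so the second number is only illustrative.

```python
def score_ratio(score_a: float, score_b: float) -> float:
    """Return score_a as a fraction of score_b."""
    return score_a / score_b


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of A against B (400-point logistic scale).

    Assumption: the leaderboard's ratings behave like standard Elo ratings.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


GEMMA_3_ELO = 1338  # figure quoted in the article
R1_ELO = 1363       # figure quoted in the article

if __name__ == "__main__":
    print(f"Gemma 3 / R1 score ratio: {score_ratio(GEMMA_3_ELO, R1_ELO):.1%}")
    print(f"Expected Gemma 3 preference rate vs. R1: "
          f"{elo_expected_score(GEMMA_3_ELO, R1_ELO):.1%}")
```

Under those assumptions, a 25-point gap corresponds to a head-to-head preference rate only slightly below even, which is one way to read the "within 98%" claim.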

That means R1 is superior to Gemma 3. However, based on Google’s estimate, the search giant claims that it would take 32 of Nvidia’s mainstream “H100” GPU chips to achieve R1’s score, whereas Gemma 3 uses only one H100 GPU.

Google’s balance of compute and Elo score is a “sweet spot,” the company claims.

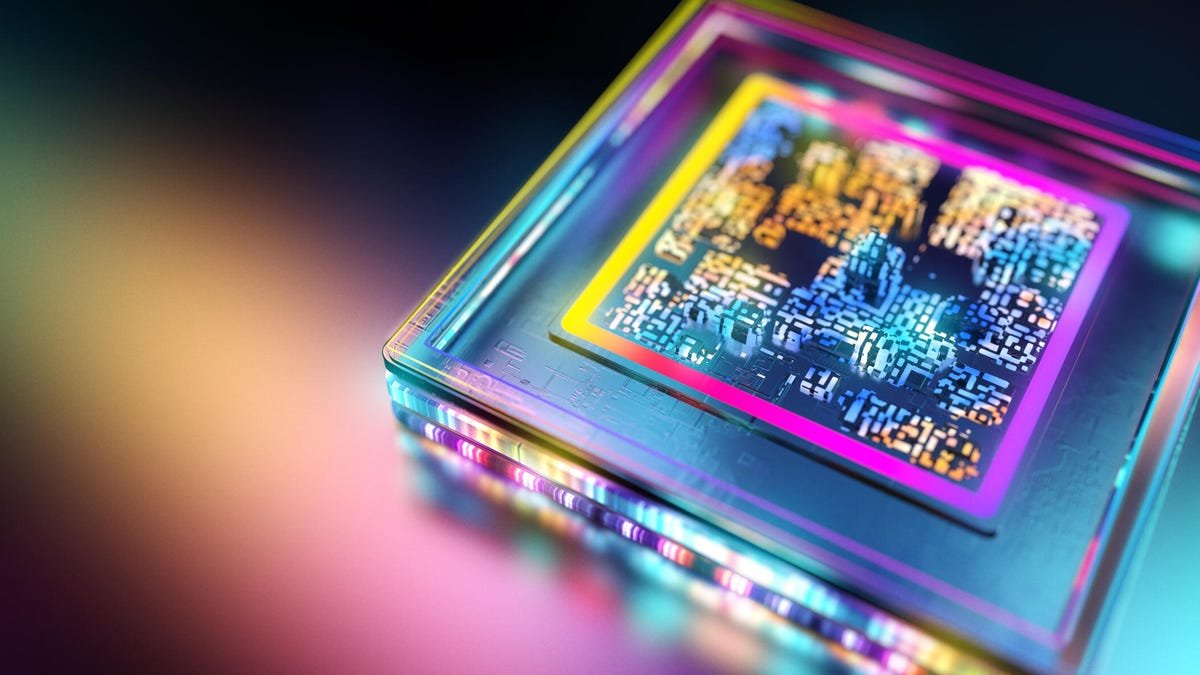

In a blog post, Google bills the new program as a model you “can run on a single GPU or TPU,” referring to the company’s custom AI chip, the “tensor processing unit.”

“Gemma 3 delivers state-of-the-art performance for its size, outperforming Llama-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on LMArena’s leaderboard,” the blog post states, referring to the Elo scores.

“This helps you to create engaging user experiences that can fit on a single GPU or TPU host.”

Google’s model also tops the Elo score of Meta’s Llama 3, which it estimates would require 16 GPUs. (Note that the numbers of H100 chips used by the competition are Google’s estimates; DeepSeek AI has only disclosed an example of using 1,814 of Nvidia’s less-powerful H800 GPUs to serve answers with R1.)

More detailed information is provided in a developer blog post on Hugging Face, where the Gemma 3 repository is offered.

The Gemma 3 models, which are intended for on-device use rather than data centers, have far fewer parameters, or neural “weights,” than R1 and other open-source models. Generally, the higher the number of parameters, the more computing power is needed.

The Gemma code offers parameter counts of 1 billion, 4 billion, 12 billion, and 27 billion, which is quite small by today’s standards. In contrast, R1 has a parameter count of 671 billion, of which it can selectively use 37 billion by ignoring or turning off parts of the network.
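
To make those parameter counts concrete, the following back-of-the-envelope sketch estimates how much memory the weights alone would occupy. It assumes 16-bit weights (2 bytes per parameter) and ignores activations and caches; neither assumption comes from Google or DeepSeek, and real deployments may store weights differently.

```python
BYTES_PER_PARAM_16BIT = 2  # assumption: weights stored as 16-bit floats

# Parameter counts quoted in the article.
GEMMA_3_SIZES = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}
R1_TOTAL_PARAMS = 671e9
R1_ACTIVE_PARAMS = 37e9


def weight_memory_gb(num_params: float,
                     bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of a model's parameters used for any single token."""
    return active_params / total_params


if __name__ == "__main__":
    for name, count in GEMMA_3_SIZES.items():
        print(f"Gemma 3 {name}: ~{weight_memory_gb(count):.0f} GB of weights")
    print(f"R1 (all parameters): ~{weight_memory_gb(R1_TOTAL_PARAMS):.0f} GB")
    print(f"R1 active share per token: "
          f"{active_fraction(R1_ACTIVE_PARAMS, R1_TOTAL_PARAMS):.1%}")
```

Under these assumptions, the 27 billion parameter model needs about 54 GB for its weights, which would fit within the 80 GB of memory on a single H100, while R1's full set of weights would come to roughly 1,342 GB and would have to be spread across many chips even though only a small share is active for any one token.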

The main enhancement that makes such efficiency possible is a widely used AI technique called distillation, whereby the knowledge in a larger trained model is extracted and transferred into a smaller model, such as Gemma 3, to give it enhanced powers.
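
As a rough illustration of the idea, the sketch below shows the textbook form of a distillation loss: the small "student" model is penalized when its output distribution drifts away from that of the large "teacher" model. This is a generic formulation, not Google's actual training recipe; the function names, the temperature value, and the toy logits are all hypothetical.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_sum for x in scaled]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The loss is zero when the student reproduces the teacher's distribution
    and grows as the two diverge.
    """
    teacher_log_p = log_softmax(teacher_logits, temperature)
    student_log_q = log_softmax(student_logits, temperature)
    return sum(
        math.exp(log_p) * (log_p - log_q)
        for log_p, log_q in zip(teacher_log_p, student_log_q)
    )


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # toy logits over a three-word vocabulary
    close_student = [3.5, 1.2, 0.4]
    far_student = [0.5, 1.0, 4.0]
    print("close student loss:", distillation_loss(teacher, close_student))
    print("far student loss:  ", distillation_loss(teacher, far_student))
```

During training, a loss of this kind would be minimized over many examples, so the smaller model gradually learns to imitate the larger one's predictions.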

The distilled model is also run through three different quality control measures, including reinforcement learning from human feedback (RLHF), which is used to shape the output of GPT and other large language models, as well as reinforcement learning from machine feedback (RLMF) and reinforcement learning from execution feedback (RLEF), which Google says improve the model’s math and coding capabilities, respectively.

A Google developer blog post details those approaches, and a separate post describes techniques used to optimize the smallest version, the 1 billion parameter model, for mobile devices. These include four common AI engineering techniques: quantization, updating the “key-value” cache layouts, improved loading time of certain variables, and “GPU weight sharing.”
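
Of those four techniques, quantization is the easiest to show in a few lines. The sketch below uses simple symmetric 8-bit quantization, in which each weight is stored as a small integer plus one shared scale factor. It is a generic illustration and an assumption about the general approach, not the specific scheme Google describes; the function names are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.8]  # toy values, not real model weights
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    print("stored integers:", quantized)
    print("largest rounding error:",
          max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight now takes one byte instead of two or four, at the cost of a small rounding error, which is the trade that makes a model easier to fit on a phone.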

The company compares not only Elo scores but also Gemma 3 against the prior Gemma 2 and against its closed-source Gemini models on benchmark tests such as the LiveCodeBench programming task. Gemma 3 generally falls below the accuracy of Gemini 1.5 and Gemini 2.0, but Google calls the results noteworthy, stating that Gemma 3 is “showing competitive performance compared to closed Gemini models.”

Gemini models are much larger in parameter count than Gemma.

The main advance over Gemma 2 is a longer “context window,” the number of input tokens that can be held in memory for the model to work on at any given time.

Gemma 2’s context window was only 8,000 tokens, whereas Gemma 3’s is 128,000, which counts as a “long” context window, better suited to working on whole papers or books. (Gemini and other closed-source models are still much more capable, with a context window of 2 million tokens for Gemini 2.0 Pro.)

Gemma 3 is also multi-modal, which Gemma 2 was not. This means it can handle image inputs along with text to answer queries such as, “What is in this picture?”

And, last, Gemma 3 supports over 140 languages, rather than the English-only support in Gemma 2.

A variety of other interesting features are buried in the fine print.

For example, a well-known issue with all large language models is that they may memorize portions of their training data sets, which could lead to leaked information and privacy violations if the models are exploited using malicious techniques.

Google’s researchers tested for information leakage by sampling training data and seeing how much could be directly extracted from Gemma 3 versus its other models. “We find that Gemma 3 models memorize long-form text at a much lower rate than prior models,” they note, which theoretically means the model is less vulnerable to information leakage.

Those wishing for more technical detail can read the Gemma 3 technical paper.